An overview of various state-of-the-art methods to achieve style and character consistency

So... you have a story you're sure will win you many Academy Awards. You've thought of the screenplay, narrative, character design, and everything else. Now, with generative AI, you can make the entire movie yourself.

Let's talk about the workflows and artifacts you'll need to create the characters, visuals, and styling for your story.

A good way to begin a generative movie project is to create the first-frame image for each scene. These images guide the style and visuals of the story. You can then animate transitions, lip-sync to audio, and more using image-to-video workflows.

It's essential to enforce your movie's cinematic theme and styling during the image generation process. There are roughly three ways to do this: fine-tuned models, embeddings, or prompt engineering. Each method has its own pros and cons, and you can combine prompt engineering with fine-tuned models or embeddings for even better results.

Using Fine-tuned Image Models

With fine-tuned image models, you can enforce the general styling for your project. You can either use one of the popular style-specific fine-tuned models available on sites like Hugging Face or CivitAI, or fine-tune your own model starting from one of the open-source base models hosted on these platforms.

Prompt Engineering for Thematic Consistency

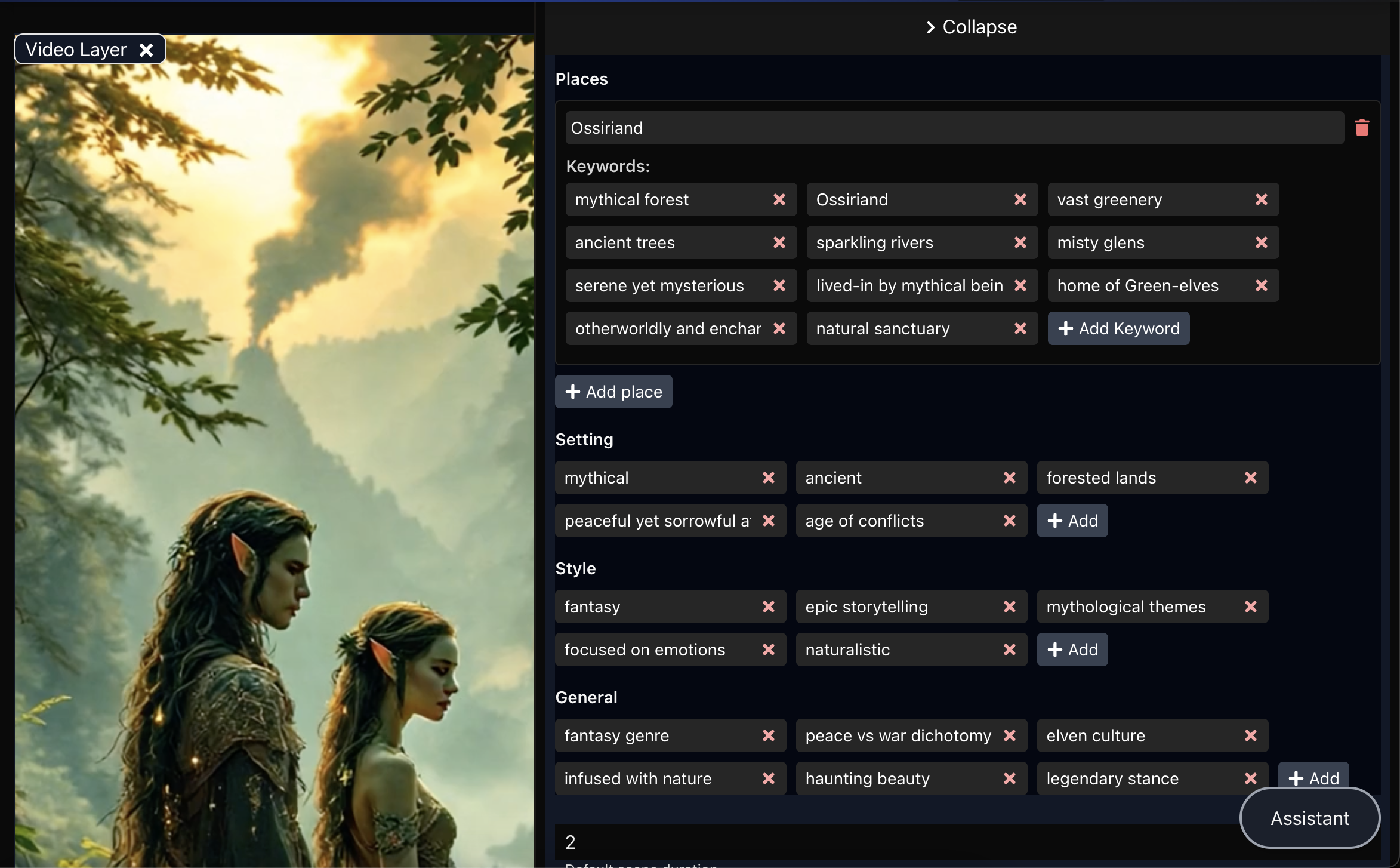

You can append theme keywords to your prompts to enforce styling, cinematics, and visuals for individual scenes or the movie as a whole.
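As a minimal sketch of this idea, the Python below keeps movie-wide theme keywords in one place and appends them to each scene prompt, while letting an individual scene override a keyword group. The names `THEME_KEYWORDS` and `build_prompt`, and all keyword values, are hypothetical examples; they are not part of SamsarOne or any image model's API. The "style", "setting", and "general" grouping simply mirrors the kinds of theme sections discussed here.

```python
from __future__ import annotations

# Hypothetical movie-wide theme keywords, grouped by section.
THEME_KEYWORDS: dict[str, str] = {
    "style": "1970s film noir, 35mm film grain",
    "setting": "rain-soaked city streets at night",
    "general": "cinematic lighting, shallow depth of field",
}


def build_prompt(
    scene_prompt: str,
    theme: dict[str, str],
    overrides: dict[str, str] | None = None,
) -> str:
    """Append theme keywords to a scene prompt.

    Per-scene overrides replace the movie-wide value for the same key, so a
    scene can change its setting while keeping the global style. An empty
    override string drops that keyword group for the scene.
    """
    merged = {**theme, **(overrides or {})}
    keywords = [value for value in merged.values() if value]
    return ", ".join([scene_prompt.strip(), *keywords])


if __name__ == "__main__":
    print(build_prompt("A detective lights a cigarette", THEME_KEYWORDS))
    print(build_prompt("A singer steps on stage", THEME_KEYWORDS,
                       {"setting": "a smoky jazz club"}))
```

Keeping the theme in a single dictionary means a global style change is one edit, and every scene prompt picks it up the next time it is built.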

In SamsarOne Studio, you can enforce or modify the theme using the "Places," "Setting," "Style," and "General" sections of the Theme tab. You can switch between JSON and Wizard views as you prefer.

SamsarOne also natively supports the Recraft family of image generation models, which includes many models fine-tuned for different styles.

Now that we’ve decided on the styling, it’s time to create our characters.

You already have the characters, theme, and style in mind. However, you may need to modify characters for specific scenes, such as changing their outfits or adjusting their appearance. This can be done by modifying keywords in the prompt when using the prompt-engineering method.
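One way to keep such changes controlled is to split each character description into traits that must never change and traits a scene may swap. The sketch below is a hypothetical illustration (the `Character` class and the example character are invented for this example): the standard-library `dataclasses.replace` creates a scene variant with a different outfit while leaving the appearance keywords untouched.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Character:
    """Hypothetical character sheet split into fixed and swappable traits."""

    name: str
    appearance: str  # identity keywords: keep identical in every scene
    outfit: str      # scene keywords: safe to change per scene

    def describe(self) -> str:
        """Render the character as a prompt fragment."""
        return f"{self.name}, {self.appearance}, wearing {self.outfit}"


MAYA = Character(
    name="Maya",
    appearance="a woman in her thirties with short silver hair and green eyes",
    outfit="a long red trench coat",
)

# Scene variant: replace() copies the frozen instance with only the outfit changed.
maya_at_gala = replace(MAYA, outfit="a black evening gown")

if __name__ == "__main__":
    print(MAYA.describe())
    print(maya_at_gala.describe())
```

Because the appearance string is shared by every variant, the identity keywords the model sees stay word-for-word identical from scene to scene.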

Character Consistency Using LoRA

LoRA (Low-Rank Adaptation) is a fine-tuning method that trains a small set of extra weights on top of a base model using only a few images of a character, so the model can then generate that character in different poses and situations.
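For reference, LoRA freezes the base model's weight matrix in each adapted layer and trains only two small matrices whose product is added to it. The math below states that update and the resulting parameter count; the layer size and rank in the worked example (a 768 × 768 layer with rank 4) are illustrative choices, not settings from any particular model.

```latex
W' = W_0 + \Delta W = W_0 + BA,
\qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)

\text{trainable parameters per layer: } dk \;\longrightarrow\; r(d + k)

\text{e.g. } d = k = 768,\ r = 4:\quad
dk = 589{,}824 \quad\text{vs.}\quad r(d + k) = 4 \times 1536 = 6{,}144 \;(\approx 1\%)
```

Because only A and B are trained, each character's LoRA is small to store and swap between projects, but every new character still needs its own training run.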

This method can reproduce a character's likeness with a high degree of accuracy, so it is typically used when an exact representation is needed across multiple scenes.

On the flip side, it requires training on a curated set of images, which is time-consuming and must be redone for every new set of characters. This makes it hard to scale outside of specific scenarios.

Character Consistency Using Prompt Engineering

Pros:

- Works across the board with many models.

- Can be slightly modified to suit individual scenes.

- Cost-efficient and doesn’t take much time.

Cons:

- Requires a model with strong prompt adherence, and prompts become long.

- Prompt length can extend to several paragraphs, which is best managed via automated prompt generation from the storyline.

- Works best with the Flux family of models, Imagen 3, and the latest versions of Stable Diffusion.
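Since these prompts can run to several paragraphs, generating them from the storyline is less error-prone than writing them by hand. The Python sketch below assumes a simple, hypothetical storyline format (a list of `Scene` objects naming the characters present) and one fixed description per character; it expands each scene into a full prompt so every character is described with identical wording in every shot. None of these names come from SamsarOne or any image model's API.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical character sheets: one fixed description per character,
# reused verbatim so the model sees the same traits in every shot.
CHARACTERS: dict[str, str] = {
    "Maya": "Maya, a woman in her thirties with short silver hair and green eyes",
    "Theo": "Theo, a lanky teenager with curly black hair and round glasses",
}

THEME = "1970s film noir, 35mm film grain, cinematic lighting"


@dataclass
class Scene:
    action: str                                            # what happens in the shot
    cast: list[str]                                        # characters present
    outfits: dict[str, str] = field(default_factory=dict)  # per-scene outfit keywords


def scene_prompts(scenes: list[Scene], characters: dict[str, str], theme: str) -> list[str]:
    """Expand each storyline scene into a full, self-contained image prompt."""
    prompts = []
    for scene in scenes:
        parts = [scene.action]
        for name in scene.cast:
            description = characters[name]  # KeyError flags an undefined character
            if name in scene.outfits:
                description += f", wearing {scene.outfits[name]}"
            parts.append(description)
        parts.append(theme)
        prompts.append(". ".join(parts) + ".")
    return prompts


STORYLINE = [
    Scene("Maya waits under a streetlight", ["Maya"], {"Maya": "a long red trench coat"}),
    Scene("Maya and Theo argue in a diner booth", ["Maya", "Theo"]),
]

if __name__ == "__main__":
    for prompt in scene_prompts(STORYLINE, CHARACTERS, THEME):
        print(prompt)
```

Failing loudly on an unknown character name is a deliberate choice here: a typo in the storyline would otherwise silently drop a character's description from the prompt.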

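| Method | Pros | Cons |
|---|---|---|
| LoRA | Reproduces a character's likeness with high accuracy across scenes | Time-consuming training; must be redone for every new set of characters |
| Prompting | Works with many models; adjustable per scene; cost-efficient and quick | Needs a model with strong prompt adherence; long prompts, best generated automatically from the storyline |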

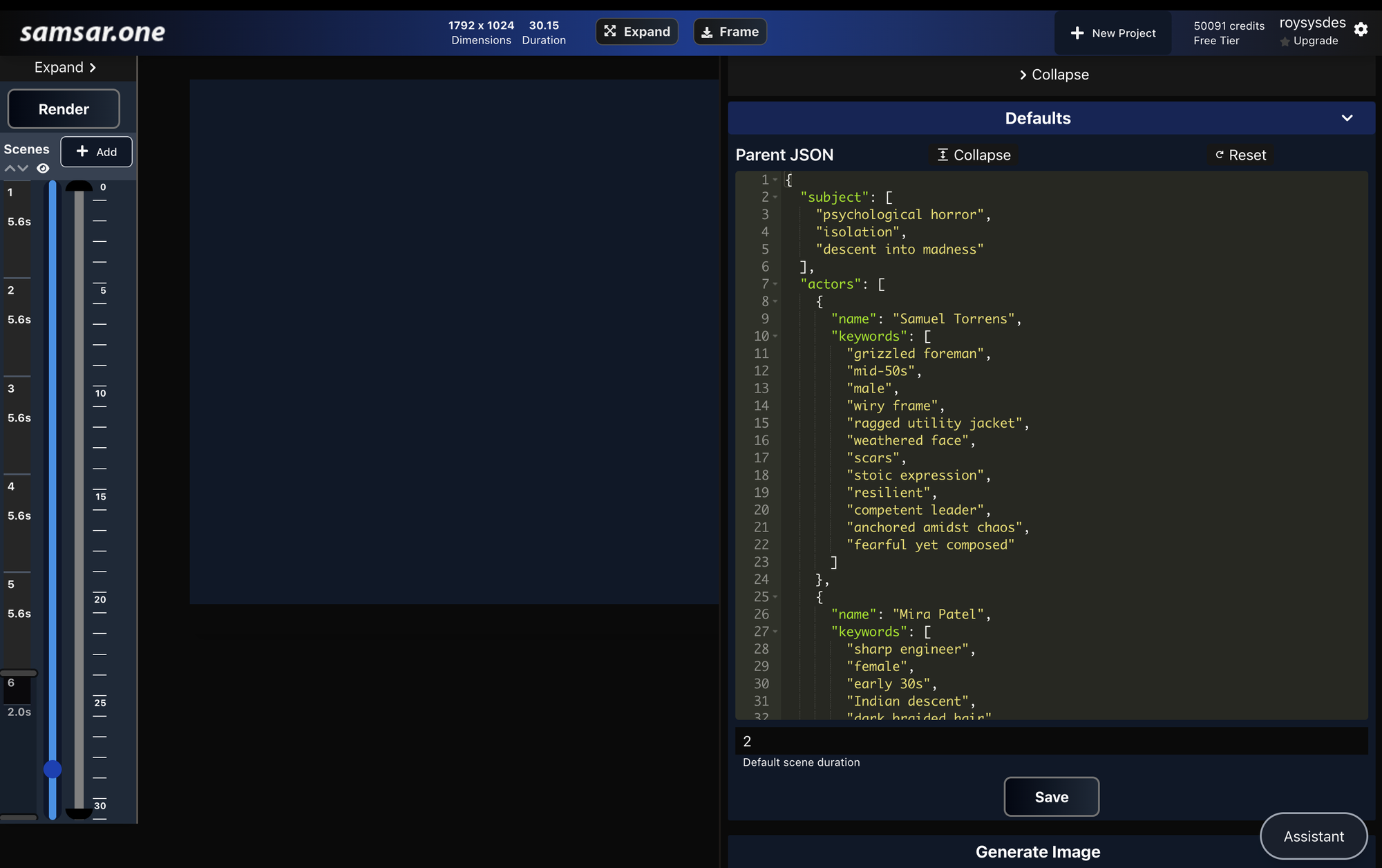

In SamsarOne Studio, you can modify your image generation prompts in the "Defaults" tab. You can also switch between the Wizard and JSON views.

Now that you’ve learned about character and scene consistency, go ahead and give it a whirl at app.samsar.one.

In the studio editor, enter your theme text in the Defaults section under the Advanced tab. Update the theme and character attributes, hit Save, and then enter a general prompt in the Generate Image tab to have the theme automatically applied to your project.